- 1Arup, Melbourne, VIC, Australia

- 2School of Psychology, Deakin University, Melbourne, VIC, Australia

- 3Centre for Leadership Advantage, Melbourne, VIC, Australia

- 4Insync, Melbourne, VIC, Australia

Boards of Directors that function effectively have been shown to be associated with successful organizational performance. Although a number of measures of Board functioning have been proposed, very little research has been conducted to establish the validity and reliability of dimensions of Board performance. The aim of the current study was to validate the measurement properties of a widely-used model and measure of Board performance. Exploratory Factor Analysis (EFA) and Confirmatory Factor Analysis (CFA) were conducted on online survey data collected from 1,546 board members from a range of Australian organizations. The analyses yielded 11 reliable factors: (1) effective internal communication and teamworking, (2) effective leadership by the Chair, (3) effective committee leadership and management, (4) effective meeting management and record keeping, (5) effective information management, (6) effective self-assessment of board functioning, (7) effective internal performance management of board members, (8) clarity of board member roles and responsibilities, (9) risk and compliance management, (10) oversight of strategic direction, and (11) remuneration management. These dimensions to a large extent correspond to previously suggested, but not widely tested, categories of effective Board performance. Despite self-reported data and a cross-sectional design, tests of common method variance did not suggest substantive method effects. The research makes significant contributions to the corporate governance literature through empirical validation of a measure shown to reliably assess 11 discrete dimensions of Board functioning and performance. Practical and theoretical implications, study limitations and future research considerations are presented.

Introduction

One of the most important factors underpinning successful organizational performance is the functioning of its board of directors (Machold and Farquhar, 2013; Bezemer et al., 2014). Boards of directors are responsible for the oversight of systems and processes that direct, control, and govern an organization's strategy, leadership decisions, regulatory compliance, and overall performance (Mowbray, 2014). Although board effectiveness has often been evaluated in terms of financial metrics such as return to shareholders, return on investment or return on assets (Dalton et al., 1998; Erhardt et al., 2003; Carter et al., 2010), effective boards also effectively oversee and challenge management's strategic, compliance, and operational decisions (Orser, 2000; Babić et al., 2011). Effective corporate governance also extends to framing, setting and monitoring an organization's values and culture (Adams, 2003; Ritchie and Kolodinsky, 2003), and ensuring that business decisions and practices are conducted ethically, fairly, and comply with community and regulatory standards (Nicholson and Kiel, 2004; Brown, 2005).

The responsibility for a large number of corporate failures, scandals, and ineffective business transformations has been attributed to ineffective board functioning (Carpenter et al., 2003; Thomson, 2010). The collapse of Lehman Brothers during the global financial crisis in 2008 is an often-cited example of how an ineffective board failed to ensure appropriate risk settings and policies to monitor corporate practice (Kirkpatrick, 2009). The press and regulatory authorities from across the world continue to expose corporate scandals (e.g., Wells Fargo Bank, Volkswagen, Australian Mutual Provident Society) that have been attributed to Board mismanagement and ineffectiveness (e.g., Cossin and Caballero, 2016).

Conceptualizing and Measuring Board Functioning

Given corporate sensitivities to the potential impact of less than flattering information about board functioning being made available to external stakeholders, it has been ‘extremely difficult for researchers to measure the task performance of boards in ways that are both reliable and comprehensive’ (Forbes and Milliken, 1999, p. 492). In practice, most boards use self-developed or consultant developed check-lists to self-assess the effectiveness of the Chair, the board, and board members over a range of composition, procedural, group process and performance factors (Nicholson and Kiel, 2004; Chen et al., 2008). However, very little empirical research has been conducted aimed at identifying and statistically validating the different dimensions of board functioning.

Among the existing frameworks developed to understand board behavior, Bradshaw et al. (1992) proposed that board functioning is comprised of three key dimensions: structure, process and performance. Nicholson and Kiel's (2004) framework consists of inputs (e.g., company history, legal constraints, environmental feedback), intellectual capital (e.g., knowledge, skills, industry experience, external networks, internal relationships, board culture and norms) and board roles and behaviors (e.g., controlling, monitoring, advising, resourcing) that dynamically interact to influence organizational performance. Nicholson and Kiel argued that all components and sub-components of their model need to be in alignment for boards to operate and perform effectively. Along similar lines, Beck and Watson's (2011) maturity model proposes that board effectiveness be assessed in terms of board competencies (e.g., knowledge and skills), board structures (e.g., policies, processes and procedures), and board behavior (e.g., norms, board-management relations, demonstrated values).

In terms of how board processes and functioning are measured and evaluated, a number of performance indices have been proposed and developed. Brown (2005), for example, recommended self-report surveys to evaluate individual performance behaviors such as attendance, the quality of that attendance (i.e., coming prepared to board meetings), the constructive contribution of board members (i.e., to conversations and the business of the board), and the extent to which board members have the necessary knowledge and skills to perform their roles. Brown (2005) also recommended that Board members evaluate overall board performance and organizational performance. Similarly, Beck and Watson (2011) suggested that board members self-rate their performance over a number of dimensions to determine whether their stage of maturity could best be described as “baseline,” “developing,” “consistent,” “continuous learning,” or “leading practice.” Neither of the self-assessment surveys proposed by Brown (2005) and by Beck and Watson (2011) has been tested for measurement reliability and validity.

Leblanc and Gillies (2003, 2005), based on qualitative research, published a widely used model and measure of board functioning. According to Leblanc and Gillies (2003), an effective board “… needs to have the right board structure, [be] supported by the right board membership, and engaged in the right board processes” (p. 9). As such, Leblanc and Gillies' framework suggests that effective boards are defined by what the board does (roles), who is on the board (composition), how the board operates (structure and processes), and the direction the organization takes as a result of advice from the board (strategy and planning). Leblanc and Gillies' (2005) measure of board functioning consists of 120 statements that board members rate to derive scores for 10 scales or sub-scales represented in their model. Board structure and board composition are measured as unidimensional 12-item scales. Board processes are measured by five 12-item sub-scales: leadership by the Chair; board member behavior and dynamics; board and management relationships; meeting management and processes; and information management and internal reporting. Board tasks are measured by three 12-item sub-scales: direction, strategy and planning; CEO, organizational performance and compensation; and risk assurance and external communication. Although the measure is widely used, its measurement properties have not been widely validated. As such, the present research aims to apply validation processes to a measure of board effectiveness and thereby establish psychometrically defensible dimensions of board functioning that can be used to reliably evaluate board performance.

Method

Participants and Sampling Procedure

A large Australian consulting firm specializing in survey research and consulting collected 9 years of board self-evaluation data using a modified version of Leblanc and Gillies' (2005) Board Effectiveness Survey. The data were collected for the purposes of measuring board effectiveness for clients, internal research, norming and marketing. Participants were emailed invitations to complete the survey online. Participants were informed that the survey was anonymous and that the data they provided could be used by the consulting firm for consulting and research purposes. Use of the data was approved by the second author's university ethics committee. The approval was granted in accord with the Australian Government National Statement on Ethical Conduct in Human Research (2007) and on the basis that the research involves the use of existing and non-identifiable data collected with no foreseeable risk of harm or discomfort for participants.

The sample consisted of 1,546 board members from a variety of industries; 73% male and 24% female. Ages of respondents varied, with 45% ranging from 55 to 64 years of age, 34% from 45 to 55 years, 12% from 35 to 44 years, 7% being 64 years and older, and 2% being 35 years and younger. Participants were members of an audit committee (37%), a remuneration committee (22%), a nominations committee (13%), or an “other” committee (28%). Experience as a board member ranged from more than 9 years (54%), 5–9 years (26%), 2–4 years (14%), and 1 year or less (6%). Most respondents (86%) were non-executive directors (i.e., no executive role in the organization and serving as independent directors), and the remaining 14% were executive directors.

Measures

As previously noted, Leblanc and Gillies' (2005) Board Effectiveness Survey is proposed to measure four broad dimensions of board functioning: board structure and role clarity (“what the board is structured as”); board composition (“who is on the board”); board process (i.e., “how the board works”); and board tasks (i.e., “what the board does”). The measure consists of ten scales or sub-scales: (1) board structure (12 items); (2) board composition (12 items); five 12-item board process sub-scales, namely (3) board and committee leadership, (4) board member behavior and dynamics, (5) board and management relationship, (6) meetings, agenda and minutes, and (7) information and internal reporting; and three 12-item board task sub-scales, namely (8) direction, strategy and planning, (9) CEO, organizational performance and compensation, and (10) risk, assurance and external communication. The data contained no outcome measures (e.g., self-ratings of Board performance; employee ratings of Board effectiveness) and did not enable links with objective organizational performance (e.g., share price, reputational indices, etc.). As such, the external validity of the measures could not be assessed.

Participants were asked to ‘Please indicate the extent to which you agree with each of the following statements’, with reference to the 120 survey statements, using a 7-point Likert scale, ranging from (1) strongly disagree to (7) strongly agree. Example items are shown in the results section.

Results

Exploratory Factor Analysis

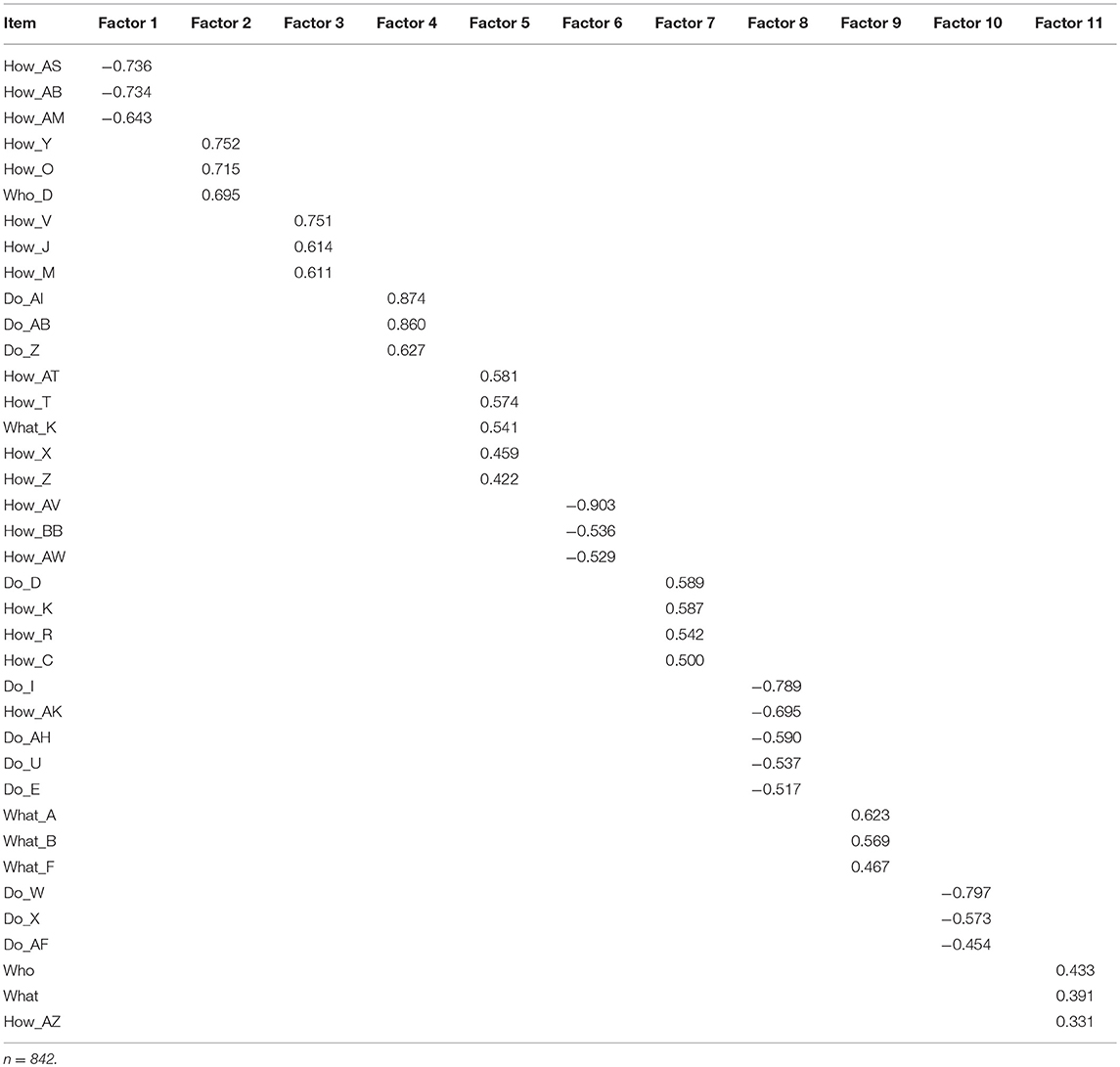

Given the adapted version of the survey has not previously been validated, the data were randomly split into roughly equal halves so the results of the Exploratory Factor Analysis (EFA) conducted on one half of the data could be cross-validated with Confirmatory Factor Analysis (CFA) using the other half of the data (Tabachnick and Fidell, 2014). The Kaiser-Meyer-Olkin (KMO) measure verified sampling adequacy for the EFA (KMO = 0.924) and Bartlett's Test of Sphericity [χ2(3, 745) = 55, p < 0.001] supported the factorability of the data. The EFA (n = 842), with maximum likelihood (ML) extraction and oblimin rotation, yielded 14 factors explaining 56% of the variance. Given that a minimum of three items is needed to reliably define a construct (Bagozzi and Yi, 1988; Jöreskog and Sörbom, 1993), the items for factors that had < 3 items were not retained for further analyses. Similarly, items that had loadings < 0.3 or that cross-loaded across factors >0.3 were not retained for subsequent analyses (Tabachnick and Fidell, 2014).
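The split-sample design and the item-retention rules described above can be illustrated with a short sketch. It is a minimal illustration rather than the authors' procedure: the function names, the fixed seed, and the even split are assumptions (the study's sub-samples were 842 and 704, and the splitting mechanism is not described), and loadings of exactly 0.3 are treated here as salient. The sketch applies the rules in a single pass, whereas the study re-ran the EFA after removing items.

```python
import random


def split_halves(n_cases, seed=0):
    """Randomly partition case indices into two roughly equal halves."""
    indices = list(range(n_cases))
    random.Random(seed).shuffle(indices)
    middle = n_cases // 2
    return indices[:middle], indices[middle:]


def retain_items(loadings, threshold=0.3, min_items=3):
    """Apply the retention rules to a {item: [loading on each factor]} mapping.

    An item is kept only if it loads at or above `threshold` on exactly one
    factor (so non-loading and cross-loading items are dropped), and a factor
    is kept only if at least `min_items` items remain on it.
    """
    by_factor = {}
    for item, row in loadings.items():
        salient = [f for f, value in enumerate(row) if abs(value) >= threshold]
        if len(salient) == 1:
            by_factor.setdefault(salient[0], []).append(item)
    return {f: items for f, items in by_factor.items() if len(items) >= min_items}
```

With a pattern matrix exported from any EFA routine, `retain_items` returns only the factors that remain defined by three or more cleanly loading items.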

Table 1 shows that after deleting three two-item factors, items loading lower than 0.3, and three cross-loading items, a subsequent EFA yielded 11 relatively clean factors, with no cross loading items, explaining 58% of the variance. The 11 factor solution consisted of: effective board communication, teamwork and dynamics; board self-assessment; effective leadership by the chair; remuneration management; committee chair leadership and management; meeting management; information management; risk and compliance management; role clarity; strategic oversight and direction; and performance management of board members. The 11 factors, to a large extent, corresponded to Leblanc and Gillies' (2005) “how,” “do,” and “what” factors. Six of the 11 factors were comprised of exclusively or predominantly “how” items, identifying discrete process factors that suggest how a board can best operate effectively. Three of the factors were exclusively or predominantly “do” factors; one was a “what” factor, and one consisted of a who, what and a how item. The analysis did not extract a clear “who” factor.

Confirmatory Factor Analysis

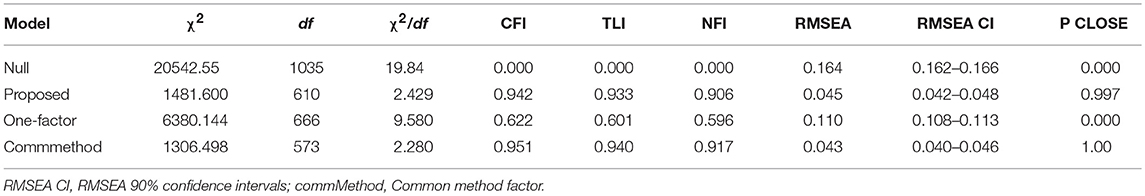

The generalizability of the EFA solution was tested with CFA using the second half of the data (n = 704). As per recommendations by Kline (2011), model fit was evaluated using a range of fit indices: chi-square, chi-square to degrees of freedom ratio (χ2/df), comparative fit index (CFI), Tucker-Lewis index (TLI), normed fit index (NFI), root mean square error of approximation (RMSEA), RMSEA confidence intervals, and p of close fit (PCLOSE). Researchers (Browne et al., 1993; Hu and Bentler, 1999; Schreiber et al., 2006) have suggested cutoff levels for determining model fit: χ2/df ≤ 2, CFI > 0.95, TLI > 0.95, NFI > 0.95, RMSEA < 0.05 with RMSEA CI ≤ 0.06 and PCLOSE > 0.05. However, NFI, CFI and TLI values between 0.90 and 0.95 have also been argued to demonstrate good fit (Marsh et al., 1996). Global, or absolute, measures of fit such as Akaike's Information Criterion (AIC), Bayesian Information Criterion (BIC), and Consistent Akaike's Information Criterion (CAIC) have also been used to compare non-nested models from the same sample that differ in complexity (Burnham and Anderson, 2002). Smaller AIC, BIC, and CAIC values suggest better fit.

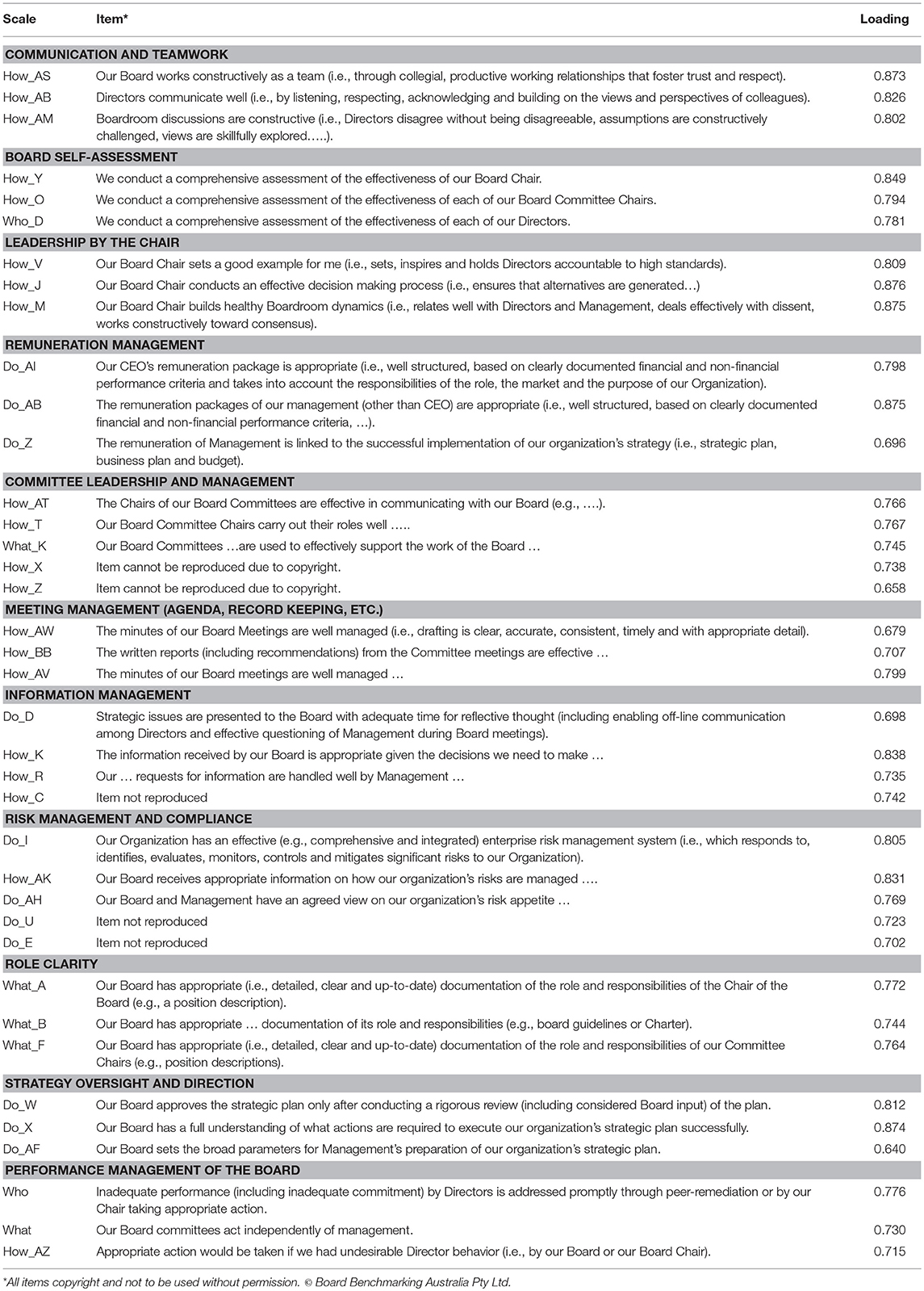

As shown in Table 2, the proposed model, derived from the EFA, yielded acceptable fit: χ2 ratio = 2.429, TLI = 0.933; CFI = 0.942; NFI = 0.906; RMSEA = 0.045 (0.042–0.048); PCLOSE = 0.997. All standardized loadings (ranging between 0.43 and 0.88) were significant (p < 0.001), and only four of the items had loadings < 0.70. Table 2 also shows that a one-factor model, included for comparison purposes, did not yield acceptable fit. Example items and associated factor loadings are shown in Table 3.
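As a rough aid to reading these results, the reported indices can be checked against the cutoffs listed earlier. The sketch below is an assumption-laden illustration, not part of the original analysis: the function name, the dictionary keys, and the labels "strict", "lenient" and "not met" are hypothetical, and the 0.90 to 0.95 band for CFI, TLI and NFI is treated as inclusive of 0.90.

```python
def rate_fit(fit):
    """Rate each index as 'strict', 'lenient' (0.90-0.95 band) or 'not met'."""
    ratings = {}
    ratings["chi2_df"] = "strict" if fit["chi2_df"] <= 2 else "not met"
    for name in ("CFI", "TLI", "NFI"):
        value = fit[name]
        if value > 0.95:
            ratings[name] = "strict"
        elif value >= 0.90:
            ratings[name] = "lenient"
        else:
            ratings[name] = "not met"
    rmsea_ok = fit["RMSEA"] < 0.05 and fit["RMSEA_upper"] <= 0.06
    ratings["RMSEA"] = "strict" if rmsea_ok else "not met"
    ratings["PCLOSE"] = "strict" if fit["PCLOSE"] > 0.05 else "not met"
    return ratings


# Values as reported in the text for the proposed 11-factor model.
reported = {
    "chi2_df": 2.429, "TLI": 0.933, "CFI": 0.942, "NFI": 0.906,
    "RMSEA": 0.045, "RMSEA_upper": 0.048, "PCLOSE": 0.997,
}
ratings = rate_fit(reported)
```

On these figures the RMSEA and PCLOSE criteria are met at the strict level, CFI, TLI and NFI fall in the 0.90 to 0.95 band, and the χ2/df ratio sits above the strict cutoff of 2.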

Given that the data were self-report data, procedures for testing common method variance (CMV) were conducted (Podsakoff et al., 2012). As such, the fit of the proposed model was compared to the fit of a CMV model whereby parameters from an additional common method factor were specified to load on each of the items. Although Table 2 shows that the fit statistics for the CMV model were marginally better than for the proposed model, the global fit indices that penalize model complexity most heavily (BIC and CAIC) were smaller, indicating better fit, for the proposed model (AIC = 1743.598, BIC = 2340.536, CAIC = 2471.536) than for the CMV model (AIC = 1642.498, BIC = 2408.037, CAIC = 2576.037); only the AIC was smaller for the CMV model. Global indices are particularly useful when comparing non-nested models from the same data set (Browne and Cudeck, 1989; Raftery, 1995). Furthermore, the standardized loadings for only two of the items decreased more than 0.16 when the common method factor was included in the model. The two items were “How_O” and “Who-D,” both being “Board Self-Assessment” items (see Table 3), with decreases of 0.42 and 0.35, respectively. Otherwise, given that the standardized loading decreased, on average, a very modest 0.07 across the 38 items, and that all factor loadings remained statistically significant (p < 0.001) after the inclusion of the common method factor, the influence of method effects can, to a large extent, be discounted (Elangovan and Xie, 2000; Johnson et al., 2011; Podsakoff et al., 2012).
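The two checks used in this comparison, which model each information criterion prefers and which items lose more than 0.16 of their standardized loading, can be sketched as follows. The helper names are hypothetical, and the item loadings in the usage note are invented purely for illustration; only the AIC, BIC and CAIC values are taken from the text.

```python
# Values as reported in the text for the two competing models.
PROPOSED = {"AIC": 1743.598, "BIC": 2340.536, "CAIC": 2471.536}
CMV = {"AIC": 1642.498, "BIC": 2408.037, "CAIC": 2576.037}


def preferred_model(first, second, labels=("proposed", "cmv")):
    """For each criterion, name the model with the smaller (better) value."""
    return {
        name: labels[0] if first[name] < second[name] else labels[1]
        for name in first
    }


def large_drops(before, after, threshold=0.16):
    """List items whose standardized loading falls by more than `threshold`."""
    return [item for item in before if before[item] - after[item] > threshold]


comparison = preferred_model(PROPOSED, CMV)
```

On the values as reported, BIC and CAIC are smaller for the proposed model, while AIC is smaller for the CMV model. For the loading check, a call such as `large_drops({"i1": 0.80, "i2": 0.75}, {"i1": 0.38, "i2": 0.70})` would flag only the hypothetical item `i1`.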

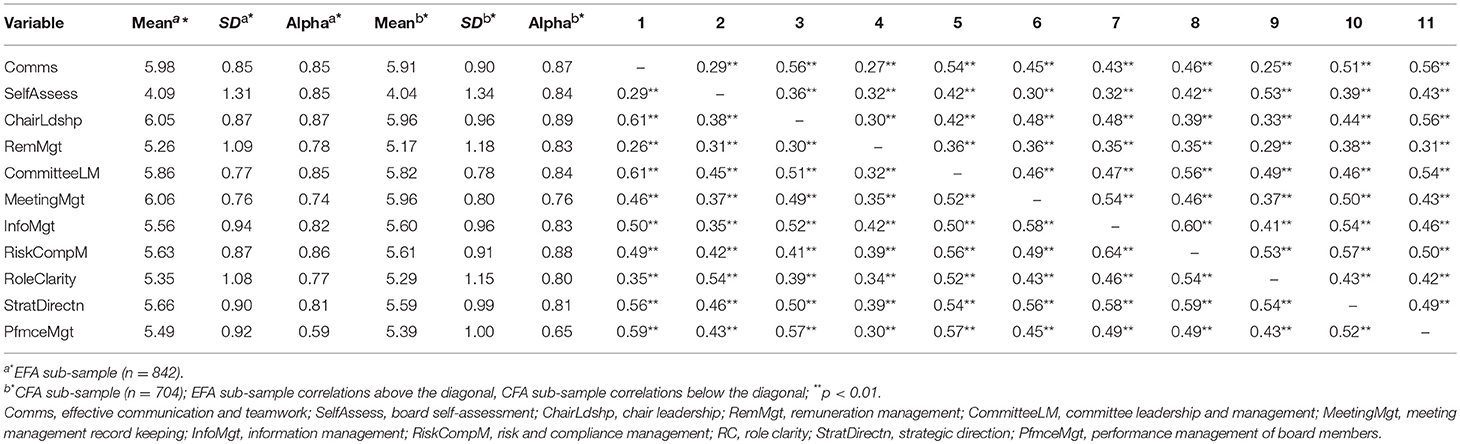

The means, standard deviations, correlation coefficients and Cronbach's alpha reliabilities for the proposed measurement model are presented in Table 4. For comparison purposes, the means, standard deviations, alpha reliabilities, and correlation coefficients derived from the EFA sub-sample (n = 842) are also provided. Table 4 shows the means, standard deviations, alphas, and correlation coefficients across both sub-samples were very similar. With the exception of “board performance management” (α1 = 0.59; α2 = 0.65) and “meeting management” (α1 = 0.74, α2 = 0.76), all constructs yielded excellent internal reliability, with alphas ranging from 0.80 to 0.89 (Nunnally, 1978). Additionally, none of the correlations among the 11 factors (ranging from r = 0.25–0.64) exceeded 0.70, and therefore do not suggest the presence of any higher-order factors (Christiansen et al., 1996).

Table 4. Means, standard deviations, internal consistencies (Cronbach's alpha in italics on diagonal) and correlations among the first-order variables in the re-specified model for the EFA validation sample (n = 842) and the CFA cross-validation sub-sample (n = 704).
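For readers who wish to reproduce the internal consistency estimates on their own data, Cronbach's alpha for a k-item scale is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The following is a minimal standard-library sketch; the function name and the example ratings are hypothetical and are not drawn from the study's data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents' item ratings.

    `rows` holds one list per respondent, each with the same k >= 2 items.
    Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 7-point ratings from five respondents on a three-item scale.
example = [[7, 6, 7], [5, 5, 6], [6, 6, 5], [3, 4, 4], [4, 3, 4]]
alpha = cronbach_alpha(example)
```

Scales with only three items, as for several of the 11 factors, can still reach high alphas when the items are strongly intercorrelated, which is the pattern this statistic rewards.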

Discussion

The aim of the study was to validate an adapted version of Leblanc and Gillies' (2005) measure of board effectiveness. Given that there has been limited empirical validation of measures of Board effectiveness, the research contributes insights into the dimensions by which Board effectiveness can reliably be measured, and therefore monitored, managed and improved.

Validation of the measure was conducted on a large sample using both exploratory and confirmatory factor analytic procedures. The results identified 11 dimensions and, as such, support the arguments that board functioning is complex and multi-faceted (Nicholson and Kiel, 2004). In support of their content validity, the 11 dimensions largely correspond to those previously identified by academics and professional bodies (Kiel and Nicholson, 2005; Leblanc, 2005; Australian Institute of Company Directors, 2016). The 11 dimensions identified include: (1) effective internal communication and teamwork; (2) effective self-assessment; (3) effective leadership by the Chair; (4) effective committee leadership and management; (5) effective meeting management and record keeping; (6) effective information management; (7) clarity of board member roles and responsibilities; (8) effective internal performance management of members of the board; (9) effective oversight of strategic direction; (10) remuneration management; and (11) risk management and compliance. Table 3 shows that the 11 factors, to a large extent, correspond to Leblanc and Gillies' (2005) “how,” “do,” and “what” factors but did not replicate a clear “who” factor.

Six of the 11 factors corresponded exclusively or predominantly to Leblanc and Gillies' (2005) “how” items, reflecting discrete process factors for how a board can operate effectively. Communication and teamwork refer to the degree to which the board works as a team, board members trust and respect each other, and board discussions are positive, productive and constructive. Board self-assessment relates to the degree to which the board assesses the effectiveness of the chair, committee chairs and individual directors. Leadership effectiveness of the chair refers to the way in which the chair effectively facilitates discussion, leads effective decision-making, role models positive leadership, and encourages constructive interpersonal dynamics and relationships between the members of the board. Information management refers to the degree to which board members perceive that important information (particularly from management) is presented to them in a timely, well-managed and appropriate manner. Committee leadership and management refers to the leadership role the committee chairs play in representing their committees at board meetings and the degree to which committee chairs effectively facilitate information sharing and foster positive working relationships with management. Meeting management refers to the extent to which the board has administrative processes in place to effectively manage the board's agendas, meetings, minutes, and the distribution of written reports.

The three dimensions that most closely corresponded to Leblanc and Gillies' (2005) “do” factors included remuneration management; risk management and compliance; and oversight of strategic direction. Remuneration management refers to how effectively the board carries out its role with regards to setting appropriate remuneration packages for the CEO and management, and the degree to which remuneration is linked to the successful implementation of organizational strategy. Risk management and compliance refers to the extent to which the board perceives the organization as having effective internal control systems and procedures in place to identify risk factors, and to ensure they are brought to the attention of the board, and appropriately mitigated. Oversight of strategic direction refers to the degree to which the board has an understanding of the actions required to execute the organization's strategic plan.

The dimension that most closely corresponded to Leblanc and Gillies' (2005) “what” factor focused on establishing role clarity. The dimension refers to the extent to which the board has processes and documentation in place to clearly define the respective responsibilities of the board, the chair and committee chairs. The eleventh dimension consisted of an amalgam of Leblanc and Gillies' “who,” “what,” and “how” items, and refers to the extent to which the board sets clear expectations about performance and takes appropriate action when inappropriate or undesirable board behaviors are displayed.

The results of the current study suggest a revised, more differentiated and statistically defensible framework for evaluating board effectiveness. In contrast to the model and measures proposed by Leblanc and Gillies (2005), the results suggest 11 discrete dimensions of board functioning that can reliably be assessed using three to five items per dimension. Short reliable measures can be less burdensome for survey respondents because they require the investment of less time and effort (Rolstad et al., 2011; Schaufeli, 2017). Furthermore, rather than supporting broad and generic taxonomies such as “who, what, how and do” (Leblanc and Gillies, 2005) or “competencies, structures, and behaviors” (Beck and Watson, 2011), the results support the use of more fine-grained and differentiated dimensions. The relatively modest correlations between the 11 factors did not suggest they could usefully be grouped within more generic or higher-order factors (Christiansen et al., 1996).

As previously noted, the current findings to a large extent confirm or overlap with existing conceptualisations of board performance and functioning. Six of the dimensions broadly overlap with Leblanc and Gillies' five “how” factors. The communication and teamwork, role clarity, and strategic oversight factors largely correspond to Beck and Watson's (2011) “board competencies.” Similarly, board self-assessment, remuneration management, risk management and compliance, and information management align with Beck and Watson's “board behaviors.” Such overlap and alignment were expected, and provide cross-validation of the importance and generalizability of the 11 dimensions identified. However, in contrast to existing models and measures, the present results are supported by quite rigorous statistical analyses.

Practical Implications

The model presented in the current study suggests that boards are complex and multi-faceted and must focus on numerous diverse activities to work effectively. The development of a valid measure of board effectiveness has clear practical implications. A valid and reliable measure can be used with confidence internally by Board members to help them assess and improve their own functioning and effectiveness. The use of reliable measures enables confident assessments of whether self-evaluations change over time and in which direction. Similarly, external consultants can use the dimensions and the measure to audit board effectiveness and to diagnose where their client organizations can best develop and change. The large sample size and the variety of industries included in the current sample also lend confidence to the generalizability of the measure.

Limitations

While the current study has provided new insights into important elements contributing to board effectiveness, some limitations need to be acknowledged. First, the results relied on self-report measures and as such are subject to the threat of “common method variance” (CMV). CMV refers to the variance in the proposed model that is a result of the measurement method, as opposed to true variance in the statistical model (Podsakoff et al., 2003). However, given that the measurement model demonstrated acceptable fit to the data, given that the correlations between the measured constructs were moderate and varied quite considerably, given the very modest average reduction in the standardized loadings after a common methods factor was included, and given that all the factor loadings remained statistically significant after the common methods factor was modeled, the issue of CMV appears not to be overly problematic. Nevertheless, future research could usefully incorporate multi-rater or longitudinal data points to help address the risk of CMV. Second, no objective data were collected concerning the financial performance of the organizations sampled. Thus, although the current model and measure suggest a range of dimensions that are important for effective Board functioning, their impact or success in delivering financial or other objective metrics could not be determined. Finally, given the modest reliability coefficient for the “Performance Management” dimension, additional items should be developed to more precisely define the construct.

Considerations for Future Research

As noted above, future research could usefully be directed toward validating the identified dimensions of effective board functioning against objective criteria. Previous research has shown positive associations between board practices and organizational performance (e.g., Drobetz et al., 2004), so determining the relative contributions of the 11 factors to such performance would be instructive. Subjective outcome measures of board satisfaction and performance could also usefully be developed and validated. Future research might then employ multi-level model perspectives and methods to better capture the amount of variance in outcomes that can be attributed to the boards themselves as opposed to the variance that can be attributed to individuals across all boards. Additional research could also be designed that draws more closely from the team effectiveness literature to better understand how boards function and the impact on overall business performance. Payne et al. (2009), for example, found that team-based attributes and practices were associated with increased financial performance assessed in terms of return on assets (ROA), earnings per share (EPS), and return on sales (ROS).

It is widely acknowledged that boards are under increasing pressure to ensure sustained organizational success and competitive advantage (Buchwald and Thorwarth, 2015). Dimensions of board functioning might therefore usefully focus on Board member competence and capability with respect to understanding the implications of new technologies and more agile ways of working. Future researchers will hopefully be able to develop valid and reliable measures of such dimensions that build on the 11 factor model presented in this study.

Overall, future research is needed to establish with confidence whether the psychometrically defensible measures reported here are indeed associated with, or predictive of, performance outcomes. The present results suggest that, if reliable outcome measures can be accessed, such research can be conducted and interpreted with confidence using the dimensions and measures described.

Conclusion

This study aimed to validate an adapted version of Leblanc and Gillies' (2005) measure of board effectiveness. Overall, the results supported a multidimensional conceptualization of board effectiveness. Eleven discrete dimensions were identified that will help boards and other stakeholders reliably assess the level of board functioning. This research makes significant contributions to the current corporate governance literature, as it presents a large-sample, empirically validated measure that can be used with confidence in organizational settings.

Author Contributions

SA, SLA, MD, and NB all contributed substantially to the conception and design of the research, interpretation of the findings, and preparation of the manuscript. SA and SLA contributed to the analysis of the data.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adams, R. (2003). Facing up to board conflict: a 5-pronged path to conflict resolution. Assoc. Manag. 55, 56–63.

Australian Institute of Company Directors (2016). Improving Board Effectiveness: Board Performance. Available online at: https://aicd.companydirectors.com.au/~/media/cd2/resources/director-resources/director-tools/pdf/05446-2-2-director-tools-bp-improving-board-effectiveness_a4_web.ashx (Accessed June 26, 2018).

Babić, V. M., Nikolić, J. D., and Erić, J. M. (2011). Rethinking board role performance: towards an integrative model. Econ. Ann. 16, 140–162. doi: 10.2298/EKA1190140B

Bagozzi, R. P., and Yi, Y. (1988). On the evaluation of structural equation models. J. Acad. Market. Sci. 16, 74–94. doi: 10.1007/BF02723327

Beck, J., and Watson, M. (2011). Transforming board evaluations—The board maturity model. Keep. Good Companies 586–592.

Bezemer, P. J., Nicholson, G. J., and Pugliese, A. (2014). Inside the boardroom: exploring board member interactions. Qual. Res. Account. Manag. 11, 238–259. doi: 10.1108/QRAM-02-2013-0005

Bradshaw, P., Murray, V., and Wolpin, J. (1992). Do nonprofit boards make a difference? An exploration of the relationships among board structure, process and effectiveness. Nonprofit Volunt. Sector Q. 21, 227–249. doi: 10.1177/089976409202100304

Brown, W. A. (2005). Exploring the association between board and organizational performance in nonprofit organizations. Nonprofit Manag. Leadership 15, 317–339. doi: 10.1002/nml.71

Browne, M. W., and Cudeck, R. (1989). Single sample cross-validation indices for covariance structures. Multivariate Behav. Res. 24, 445–455. doi: 10.1207/s15327906mbr2404_4

Browne, M. W., Cudeck, R., and Bollen, K. A. (1993). Alternative ways of assessing model fit. Sage Focus Editions 154, 136–136.

Buchwald, A., and Thorwarth, S. (2015). Outside Directors of the Board, Competition and Innovation. Düsseldorf Institute for Competition Economics (DICE) Discussion Paper, No. 17. Dusseldorf University Press.

Burnham, K. P., and Anderson, D. R. (2002). Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach, 2nd Edn. New York, NY: Springer.

Carpenter, M. A., Pollock, T. G., and Leary, M. M. (2003). Testing a model of reasoned risk-taking: governance, the experience of principals and agents, and global strategy in high-technology IPO firms. Strat. Manag. J. 24, 803–820. doi: 10.1002/smj.338

Carter, D. A., D'Souza, F. P., Simkins, B. J., and Simpson, W. G. (2010). The gender and ethnic diversity of US boards and board committees and firm financial performance. Corp. Govern. Int. Rev. 18, 396–414. doi: 10.1111/j.1467-8683.2010.00809.x

Chen, G., Hambrick, D. C., and Pollock, T. G. (2008). Puttin' on the Ritz: pre-IPO enlistment of prestigious affiliates as deadline-induced remediation. Acad. Manag. J. 51, 954–975. doi: 10.5465/amj.2008.34789666

Christiansen, N. D., Lovejoy, M. C., Szymanski, J., and Lango, A. (1996). Evaluating the structural validity of measures of hierarchical models: an illustrative example using the social-problem solving inventory. Edu. Psychol. Meas. 56, 600–625. doi: 10.1177/0013164496056004004

Cossin, D., and Caballero, J. (2016). A Practical Perspective: The Four Pillars of Board Effectiveness. Lausanne: IMD Global Board Center.

Dalton, D. R., Daily, C. M., Ellstrand, A. E., and Johnson, J. L. (1998). Meta-analytic reviews of board composition, leadership structure, and financial performance. Strat. Manag. J. 19, 269–290.

Drobetz, W., Schillhofer, A., and Zimmermann, H. (2004). Corporate governance and expected stock returns: evidence from Germany. Eur. Fin. Manage. 10, 267–293. doi: 10.1111/j.1354-7798.2004.00250.x

Elangovan, A. R., and Xie, J. L. (2000). Effects of perceived power of supervisor on subordinate work attitudes. Leadership Dev. J. 21, 319–328. doi: 10.1108/01437730010343095

Erhardt, N. L., Werbel, J. D., and Shrader, C. B. (2003). Board of director diversity and firm financial performance. Corp. Govern. Int. Rev. 11, 102–111. doi: 10.1111/1467-8683.00011

Forbes, D. P., and Milliken, F. J. (1999). Cognition and corporate governance: understanding boards of directors as strategic decision-making groups. Acad. Manage. Rev. 24, 489–505. doi: 10.5465/amr.1999.2202133

Hu, L., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equation Model. 6, 1–55. doi: 10.1080/10705519909540118

Johnson, J., Rosen, C. C., and Djurdjevic, E. (2011). Assessing the impact of common method variance on higher order multidimensional constructs. J. Appl. Psychol. 96, 744–761. doi: 10.1037/a0021504

Jöreskog, K. G., and Sörbom, D. (1993). LISREL 8: Structural Equation Modelling With the Simplis Command Language. Hillsdale, NJ: Lawrence Erlbaum Associates.

Kiel, G. C., and Nicholson, G. J. (2005). Evaluating boards and directors. Corp. Govern. Int. Rev. 13, 613–631. doi: 10.1111/j.1467-8683.2005.00455.x

Kirkpatrick, G. (2009). The Corporate Governance Lessons from the Financial Crisis. Financial Market Trends. Paris, OECD Publication.

Kline, R. B. (2011). Principles and Practice of Structural Equation Modeling. New York, NY: Guilford Press.

Leblanc, R. (2005). Assessing board leadership. Corp. Govern. Int. Rev. 13, 654–666. doi: 10.1111/j.1467-8683.2005.00457.x

Leblanc, R., and Gillies, J. (2003). The coming revolution in corporate governance. Ivey Bus. J. 1:112. doi: 10.1111/j.1467-8683.1993.tb00023.x

Machold, S., and Farquhar, S. (2013). Board task evolution: a longitudinal field study in the UK. Corp. Govern. Int. Rev. 21, 147–164. doi: 10.1111/corg.12017

Marsh, H. W., Balla, J. R., and Hau, K. T. (1996). Advanced Structural Equation Modeling: Issues and Techniques. Mahwah, NJ: Erlbaum.

Mowbray, D. (2014). “The board vs executive view of board effectiveness and its influence on organizational performance,” in Proceedings of The European Conference on Management, Leadership and Governance, ed V. Grozdanic (Zagreb: Academic Conferences and Publishing International Limited), 183–191.

Nicholson, G. J., and Kiel, G. C. (2004). Breakthrough board performance: how to harness your board's intellectual capital. Corp. Govern. Int. J. Bus. Soc. 4, 5–23. doi: 10.1108/14720700410521925

Orser, B. (2000). Creating High-Performance Organizations: Leveraging Women's Leadership. Conference Board of Canada, Ottawa.

Payne, G., Benson, G., and Finegold, D. (2009). Corporate board attributes, team effectiveness and financial performance. J. Manag. Stud. 46, 704–731. doi: 10.1111/j.1467-6486.2008.00819.x

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., and Podsakoff, N. P. (2003). Common method biases in behavioral research: a critical review of the literature and recommended remedies. J. Appl. Psychol. 88, 879–903. doi: 10.1037/0021-9010.88.5.879

Podsakoff, P. M., MacKenzie, S. B., and Podsakoff, N. P. (2012). Sources of method bias in social science research and recommendations on how to control it. Ann. Rev. Psychol. 63, 539–569. doi: 10.1146/annurev-psych-120710-100452

Raftery, A. E. (1995). “Bayesian model selection in social research,” in Sociological Methodology, Vol. 25, ed P. V. Marsden (Oxford: Blackwell), 111–163.

Ritchie, W. J., and Kolodinsky, R. W. (2003). Nonprofit organization financial performance measurement: an evaluation of new and existing financial performance measures. Nonprofit Manag. Leadership 13, 367–381. doi: 10.1002/nml.5

Rolstad, S., Adler, J., and Rydén, A. (2011). Response burden and questionnaire length: is shorter better? A review and meta-analysis. Value Health 14, 1101–1108. doi: 10.1016/j.jval.2011.06.003

Schaufeli, W. B. (2017). Applying the job demands-resources model: a ‘how to’ guide to measuring and tackling work engagement and burnout. Organ. Dynam. 46, 120–132. doi: 10.1016/j.orgdyn.2017.04.008

Schreiber, J. B., Nora, A., Stage, F. K., Barlow, E. A., and King, J. (2006). Reporting structural equation modeling and confirmatory factor analysis results: a review. J. Edu. Res. 99, 323–338. doi: 10.3200/JOER.99.6.323-338

Tabachnick, B. G., and Fidell, L. S. (2014). Using Multivariate Statistics, 6th Edn. Harlow: Pearson Education Limited.

Keywords: board of directors, board of directors effectiveness, measures of effectiveness, validity and reliability of dimensions of board functioning, validity and reliability of dimensions of board effectiveness

Citation: Asahak S, Albrecht SL, De Sanctis M and Barnett NS (2018) Boards of Directors: Assessing Their Functioning and Validation of a Multi-Dimensional Measure. Front. Psychol. 9:2425. doi: 10.3389/fpsyg.2018.02425

Received: 18 August 2018; Accepted: 19 November 2018; Published: 04 December 2018.

Edited by:

Radha R. Sharma, Management Development Institute, India

Reviewed by:

Serena Cubico, Università degli Studi di Verona, Italy

Moon-Ho R. Ho, Nanyang Technological University, Singapore

Copyright © 2018 Asahak, Albrecht, De Sanctis and Barnett. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Simon L. Albrecht, simon.albrecht@deakin.edu.au
